Poisoned Neural Networks

In the summer of 2019, I travelled to the University of Michigan in Ann Arbor to work on attacks on, and defences of, neural networks. These machine learning models are susceptible to many types of attacks, which can be categorised along three dimensions:

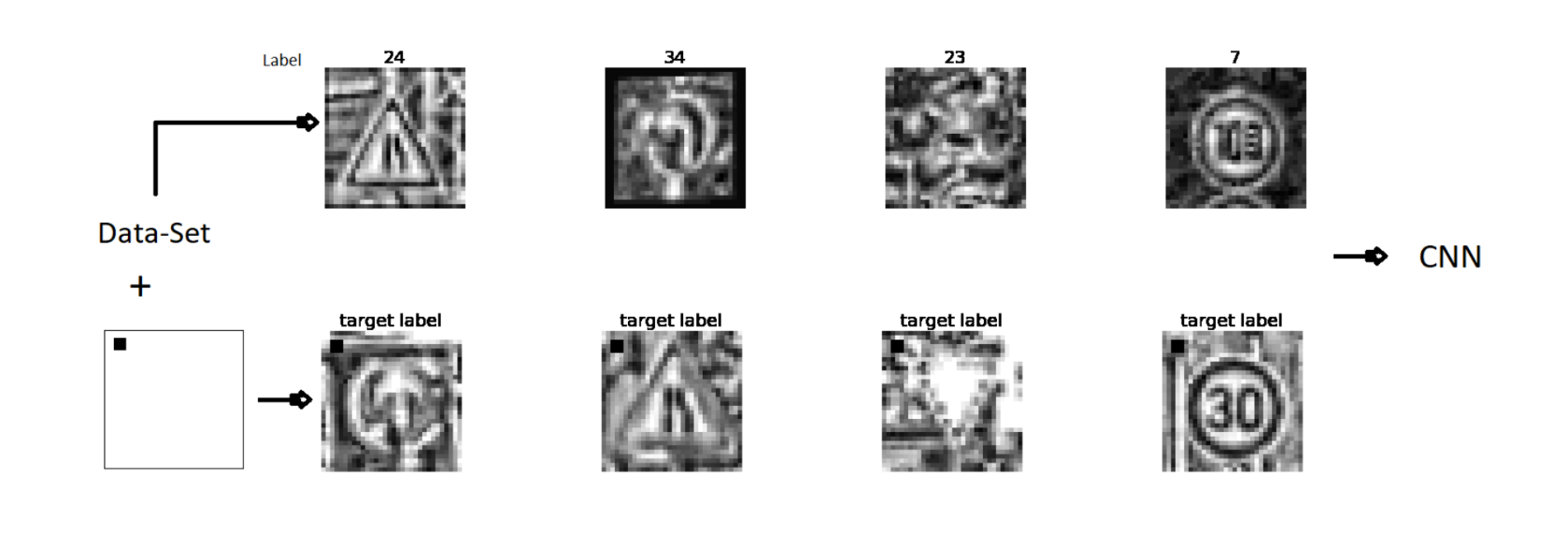

- Timing: This dimension captures when an attack takes place: either before the model has been trained (a poisoning attack) or after (an evasion attack). In a poisoning attack, the attacker modifies the training data the model learns from; in an evasion attack, the adversary modifies an input fed to the already-trained model at test time.

- Information: This dimension addresses how much information about the model is available to the attacker. When the attacker has complete information about the ML model, it is known as a white-box attack. In a black-box attack, on the other hand, the attacker has very limited knowledge. However, the attacker can learn more about the model by querying it and then training a substitute model on its responses.

- Goals: It is also important to consider the adversary's goal. Generally, an attacker aims either to target a particular class (a targeted attack) or to reduce the accuracy of the model as a whole!
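
To make the three dimensions concrete, here is a minimal sketch on a toy problem. It assumes a hypothetical two-class nearest-centroid classifier on 2-D points standing in for a real neural network; the labels "stop" and "yield", the data, and the helper names `train`, `predict` and `evade` are all made up for illustration. It shows a targeted poisoning attack (mislabelled points injected before training), a white-box evasion attack (a test input nudged after training using the model's internals), and a black-box variant in which the attacker only queries the victim, trains a substitute on its answers and crafts the evasion input against the substitute.

```python
import math

# Toy stand-in for a traffic-sign classifier: a nearest-centroid model on 2-D
# points. Hypothetical illustration only, not a real GTSRB setup.

def train(data):
    """Fit one centroid per class from a list of ((x, y), label) pairs."""
    sums, counts = {}, {}
    for (x, y), label in data:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {lbl: (sx / counts[lbl], sy / counts[lbl])
            for lbl, (sx, sy) in sums.items()}

def predict(model, point):
    """Return the label whose centroid is closest to `point`."""
    return min(model, key=lambda lbl: math.dist(model[lbl], point))

def evade(model, point, wanted, step=0.1, max_steps=100):
    """Evasion (after training): nudge `point` towards the centroid of `wanted`
    until the prediction flips. Reads the centroids, i.e. white-box access
    to `model`. Returns the perturbed point, or None if the budget runs out."""
    x, y = point
    cx, cy = model[wanted]
    for _ in range(max_steps + 1):
        if predict(model, (x, y)) == wanted:
            return (x, y)
        d = math.dist((x, y), (cx, cy))
        if d == 0:
            break
        move = min(step, d)  # never overshoot the centroid
        x += move * (cx - x) / d
        y += move * (cy - y) / d
    return None

# Clean training set: "stop" clusters around (0, 0), "yield" around (4, 4).
clean = [((-1, 0), "stop"), ((1, 0), "stop"), ((0, -1), "stop"), ((0, 1), "stop"),
         ((3, 4), "yield"), ((5, 4), "yield"), ((4, 3), "yield"), ((4, 5), "yield")]
target = (1.5, 1.5)  # the one input the attacker wants labelled "yield"

victim = train(clean)
print(predict(victim, target))  # stop

# Poisoning (before training), targeted goal: inject mislabelled points near
# the target so the "yield" centroid is dragged towards it.
poison = [((1, 1), "yield")] * 4
poisoned = train(clean + poison)
print(predict(poisoned, target))   # yield
print(predict(poisoned, (-1, 0)))  # stop

# White-box evasion (after training): perturb the target input itself.
adv = evade(victim, target, "yield")
print(predict(victim, adv))               # yield
print(round(math.dist(adv, target), 2))   # 0.8

# Black-box: the attacker can only call predict(victim, ...). They label a
# grid of queries with the victim's answers and train a substitute on them.
queries = [(i * 0.5, j * 0.5) for i in range(-4, 13) for j in range(-4, 13)]
substitute = train([(q, predict(victim, q)) for q in queries])
adv_bb = evade(substitute, target, "yield")  # crafted on the substitute only
print(predict(victim, adv_bb))               # yield: the attack transfers
```

The poisoning goal here is targeted: the attacker cares about one input, and the clean "stop" example at (-1, 0) keeps its label, although in a model this simple the shifted centroid also relabels other inputs near the target. Real attacks on neural networks typically use gradients (of the victim in the white-box case, or of the substitute in the black-box case) rather than stepping towards a class centroid.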

I found the book “Adversarial Machine Learning” by Yevgeniy Vorobeychik and Murat Kantarcioglu (in the Synthesis Lectures series edited by Ronald J. Brachman) to be a great resource during my research!

I investigated targeted black-box poisoning attacks on models trained on the GTSRB (German Traffic Sign Recognition Benchmark) dataset. Have a look at my findings below.