Honours Project: Acoustic Perception

Autonomous vehicles primarily rely on cameras, LiDAR, and RADAR to detect visible objects. However, these sensors face limitations in obstructed urban settings, where narrow streets, parked cars, and buildings block the line of sight. Critically, they may fail to detect approaching emergency vehicles or hidden road users in time.

With acoustic perception, we enable vehicles to detect and localize sounds such as sirens, horns, and the tire and engine noise generated by other traffic participants and emergency vehicles. Because sound travels around obstacles, our solution operates beyond line of sight and enhances safety and situational awareness for self-driving systems.

Approach

We place 32 microphones on the car, 8 along each of its four sides. This arrangement provides $360^\circ$ spatial coverage around the vehicle and offers several further benefits: it gives uniform beamforming resolution in all directions, does not occlude the LiDAR, and positions the camera centrally within the array.
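As a rough illustration of this layout, the sketch below generates 2-D positions for 32 microphones, 8 evenly spaced along each side of a rectangle centred on the vehicle. The footprint (4.5 m by 1.8 m), the half-step inset that keeps microphones off the corners, and the axis convention (x forward, y left) are assumptions for illustration, not the project's actual mounting positions; `rectangular_array` is a hypothetical helper.

```python
import numpy as np


def rectangular_array(length_m: float, width_m: float, per_side: int = 8) -> np.ndarray:
    """Return (4 * per_side, 2) xy positions of microphones spaced evenly along
    the four sides of a rectangle centred on the vehicle origin.

    Assumed convention: x points forward, y points left, units in metres.
    Rows are ordered front, right, rear, left.
    """
    # Fractions along each side; the half-step offset keeps mics off the corners.
    t = (np.arange(per_side) + 0.5) / per_side
    half_l, half_w = length_m / 2.0, width_m / 2.0
    front = np.column_stack([np.full(per_side, half_l), half_w - t * width_m])
    right = np.column_stack([half_l - t * length_m, np.full(per_side, -half_w)])
    rear = np.column_stack([np.full(per_side, -half_l), -half_w + t * width_m])
    left = np.column_stack([-half_l + t * length_m, np.full(per_side, half_w)])
    return np.vstack([front, right, rear, left])
```

For example, `rectangular_array(4.5, 1.8)` returns a `(32, 2)` array. Because every side carries the same number of evenly spaced microphones, the array is symmetric about both vehicle axes, which is one simple way to avoid favouring any particular direction.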

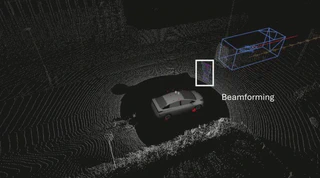

Built on ROS and Autoware, our setup records LiDAR, camera, and microphone-array data simultaneously, and we ensure that the collected data is temporally and spatially synchronized. We verify this synchronization using a traditional Delay-and-Sum beamforming algorithm that estimates the direction of ambient traffic noise. The algorithm computes the sound pressure at each point of an arbitrarily chosen circular grid around the car. The videos below show the system operating live in the real world.
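The Delay-and-Sum idea can be sketched in a few lines of NumPy. For each point on a circular grid around the car, the propagation delay from that point to every microphone is computed, each channel is advanced by its delay (as a phase shift in the frequency domain), and the aligned channels are averaged; the energy of the result is the steered response power at that point. The grid radius, the 343 m/s speed of sound, the spherical-wave (near-field) delay model, and the function names are assumptions for illustration. This is an offline sketch, not the project's ROS implementation.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 °C


def circular_grid(radius_m: float, n_points: int) -> np.ndarray:
    """Return (n_points, 2) xy candidate source positions on a circle around the car."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False)
    return np.column_stack([radius_m * np.cos(angles), radius_m * np.sin(angles)])


def delay_and_sum_power(signals: np.ndarray, mic_xy: np.ndarray,
                        grid_xy: np.ndarray, fs: float) -> np.ndarray:
    """Steered response power of a frequency-domain Delay-and-Sum beamformer.

    signals: (M, N) time-domain samples, one row per microphone
    mic_xy:  (M, 2) microphone positions in metres
    grid_xy: (G, 2) candidate source positions in metres
    Returns a (G,) array with one beam power value per grid point.
    """
    n_samples = signals.shape[1]
    spectra = np.fft.rfft(signals, axis=1)            # (M, F)
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)    # (F,)
    power = np.empty(len(grid_xy))
    for g, point in enumerate(grid_xy):
        # Spherical-wave model: delay = distance from grid point to each mic / c.
        delays = np.linalg.norm(mic_xy - point, axis=1) / SPEED_OF_SOUND_M_S  # (M,)
        # Advancing channel m by delays[m] undoes its propagation phase, so a
        # source located at this grid point adds up coherently across mics.
        steering = np.exp(2j * np.pi * np.outer(delays, freqs))              # (M, F)
        beam = (steering * spectra).mean(axis=0)                             # (F,)
        power[g] = np.sum(np.abs(beam) ** 2)
    return power
```

Calling `delay_and_sum_power` on, say, a 72-point `circular_grid` (5° spacing) gives one power value per grid point, and the point with the highest power is the estimated direction of arrival. With microphones spaced much farther apart than half a wavelength, high frequencies alias spatially, so practical systems often band-limit the signals before beamforming.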

(For both videos, only the grid points where sound pressure is detected are shown. Colour indicates the magnitude of the detected sound pressure.)

Dataset

This project highlights the potential of sound as a powerful sensing modality for detecting and localizing traffic participants beyond the visual line-of-sight. When combined with data from conventional sensors, such as LiDAR and cameras, it opens new possibilities for robust perception in autonomous systems.

We created a pipeline that uses LiDAR data to label microphone-array recordings. Specifically, we run the CenterPoint 3D object detection model on the LiDAR data to detect and locate objects in each scene. These detections then serve as labels for the recorded audio, which can be used to train machine learning models for sound event localization and detection (SELD) tasks. Using this automated pipeline, we recorded a dataset of 35,000 data points, creating, to our knowledge, the first automotive dataset that combines these three sensor modalities.
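One way such LiDAR detections could be turned into SELD-style audio labels is sketched below: each detected object's box centre is converted into an azimuth and a distance relative to the vehicle and assigned to the nearest audio label frame. The `Detection` and `SeldLabel` records, the 100 ms label hop, the 50 m range cut-off, and the assumption that detections and audio share one clock and one vehicle frame are all hypothetical choices for illustration. The project's actual label format is not specified here, and the sketch does not call CenterPoint itself.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical record distilled from one LiDAR object detection."""
    stamp_s: float  # detection timestamp, assumed on the same clock as the audio
    label: str      # object class, e.g. "car"
    x_m: float      # box centre in the vehicle frame, x forward
    y_m: float      # box centre in the vehicle frame, y left


@dataclass
class SeldLabel:
    frame: int          # index of the audio label frame
    label: str
    azimuth_deg: float  # counter-clockwise from straight ahead, in (-180, 180]
    distance_m: float


def detections_to_seld_labels(detections, audio_start_s, frame_hop_s=0.1,
                              max_range_m=50.0):
    """Convert detections into per-frame class / direction / distance labels."""
    labels = []
    for det in detections:
        offset_s = det.stamp_s - audio_start_s
        if offset_s < 0:
            continue  # detection precedes the audio recording
        distance = math.hypot(det.x_m, det.y_m)
        if distance > max_range_m:
            continue  # assumed cut-off: too far away to be a useful acoustic label
        labels.append(SeldLabel(
            frame=round(offset_s / frame_hop_s),  # nearest label frame
            label=det.label,
            azimuth_deg=math.degrees(math.atan2(det.y_m, det.x_m)),
            distance_m=distance,
        ))
    return labels
```

Rounding to the nearest frame, rather than flooring, avoids off-by-one frame indices caused by floating-point timestamps such as `0.3 / 0.1` evaluating to slightly less than 3.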